In a More Volatile World, New Models Are Needed

Now, perhaps more than ever, we understand our world is shaped by complex, interactive, and dynamic systems. Increased climate volatility has shown us why we need to understand these complex systems when we design landscapes. While landscape architects have been fast to embrace ecological systems thinking, they have been slower to see how systems thinking can transform our ways of imagining, visualizing, and then intervening in the environment.

There have been significant advances in the tools we use to understand and represent the multitude of biological and physical factors that shape our environment, particularly in the areas of computational modeling and simulation. These advances were the focus of the recent Simulating Natures symposium, organized by Karen M’Closkey, ASLA, associate professor of landscape architecture at the University of Pennsylvania and PEG Office of Landscape + Architecture, and Keith VanDerSys, also with PEG, and hosted by the landscape architecture department at the University of Pennsylvania.

While computers and suites of software programs have become integrated into classrooms, studios, and offices, they have largely been used to computerize manual drawing and modeling processes, despite their ability to move beyond the purely representational and into the realms of projection and speculation. As James Corner, ASLA, founder of Field Operations, stated in his keynote lecture at the symposium, “Because of the facility afforded by technology and software, it’s relatively easy to produce novel forms. Design has become easy if you only think it’s about form-making and aesthetic responses. It’s not so easy to start to think about how to make the world better. How do we think about tools that allow us to improve conditions rather than to just invent new forms?”

To date, we have embraced a simplistic view of ecology that trends toward modeling efficiencies, operating under the assumption that there is a singular universal truth, so we gear modeling efforts toward definitive answers. Presentations from the symposium challenged this notion: Each session demonstrated a different approach to the act of modeling and simulation, offering suggestions as to the roles new models might play and how they could be used to engage dynamic systems that evolve and change. These roles included the model as a choreographer of feedback loops; the model as a provocateur and tool for thinking; and the model as a translator of information.

Models as Choreographers of Feedback Loops

The first session focused on the capability of hydrodynamic models to chart and understand the relationships among various invisible processes, enabling us to register change over time. Hydrodynamic models can choreograph feedback loops through an interplay of physical modeling, sensing, analysis, and digital modeling. The work of panelists in this session nests different physical and temporal scales, simulating the impacts that interventions have on larger systems. For example, Heidi Nepf at MIT has a laboratory that models the small-scale physics of aquatic vegetation to simulate larger patch dynamics. Philip Orton of Stevens Institute of Technology, who often collaborates with SCAPE / Landscape Architecture, focused on modeling the effects of breakwaters and benthic interventions on storm surge in Staten Island and Jamaica Bay.

Together, the models from the first session challenged our assumptions of what is permanent. Bradley Cantrell, ASLA, Harvard University Graduate School of Design, linked many of the session’s presentations through his advocacy for a shift from modeling for efficiency to modeling for resistance. Efficiency assumes a predetermined end goal, while resistance leads toward adaptation, evolution, and novel landscapes, all of which are critical to designing for resiliency. Working toward adaptability represents a paradigm shift that calls into question our idea of the fixed state.

Models as Tools for Thinking

Philosopher Michael Weisberg then offered the idea that the model can serve as a tool for thinking: an experimental mechanism for exploring new ideologies. The session examined agent- or rule-based modeling techniques, which simulate the dynamic interaction of multiple entities and can be used to represent adaptive, living systems. Through a process of bottom-up, rather than top-down, modeling, the interrelationships of individual agents can be used to explore the relationship between scenario and outcome. These models show potential for how we might engage complex socio-ecological systems, which is imperative as we enter the Anthropocene Era.

For artist and NYU professor Natalie Jeremijenko, agent-based modeling has led to an “organism-centric design” approach. Understanding intelligent responses to stimuli from non-human organisms could offer a more compelling way of understanding complex interrelationships than two-dimensional quantification in graphs and charts.

Panelists discussed our tendency to model that which we know and can predict, which is problematic in that it leaves significant territory unexplored. The concept of “solution pluralism,” presented by Stephen Kimbrough, calls for an open-ended decision-making process that narrows the possible outcomes to those determined to be reasonable, while leaving the final selection of a decision open.

Models as Translators of Information

Finally, we heard examples of how models might serve as translators, communicating environmental patterns that underlie the visible environment. Panelists presented new ways of translating information for delivery and consumption, linking the real and the abstract, which are driven by new methods of sensing and data collection.

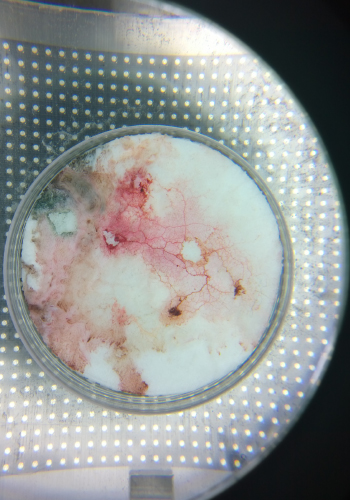

Michael Allen’s work on monitoring microscopic activity in soil represented a departure from the traditional method of core sampling. Through the real-time monitoring of soil coupled with sensing water and nutrient concentrations, we can now understand the dynamism of production and mortality below grade.

The MIT Sensable City Lab’s Underworld project, presented by Newsha Ghaeli, aims to use sewage as a platform for monitoring public health, tracking disease, antibiotic resistance, and chemical compounds in real time. Combined with demographic and spatial data at the surface, the project has the potential to map our environment in a revealing way.

Unpredictable issues require unprecedented tools — but they, in turn, may yield unpredictable results. As M’Closkey stated, “Variability and change are built into the thinking behind simulations. The uncertainty inherent in many simulations reflects the uncertainty inherent to the systems they characterize.”

Watch videos of the entire symposium.

This guest post is by Colin Patrick Curley, Student ASLA, master of architecture and master of landscape architecture candidate, University of Pennsylvania.
